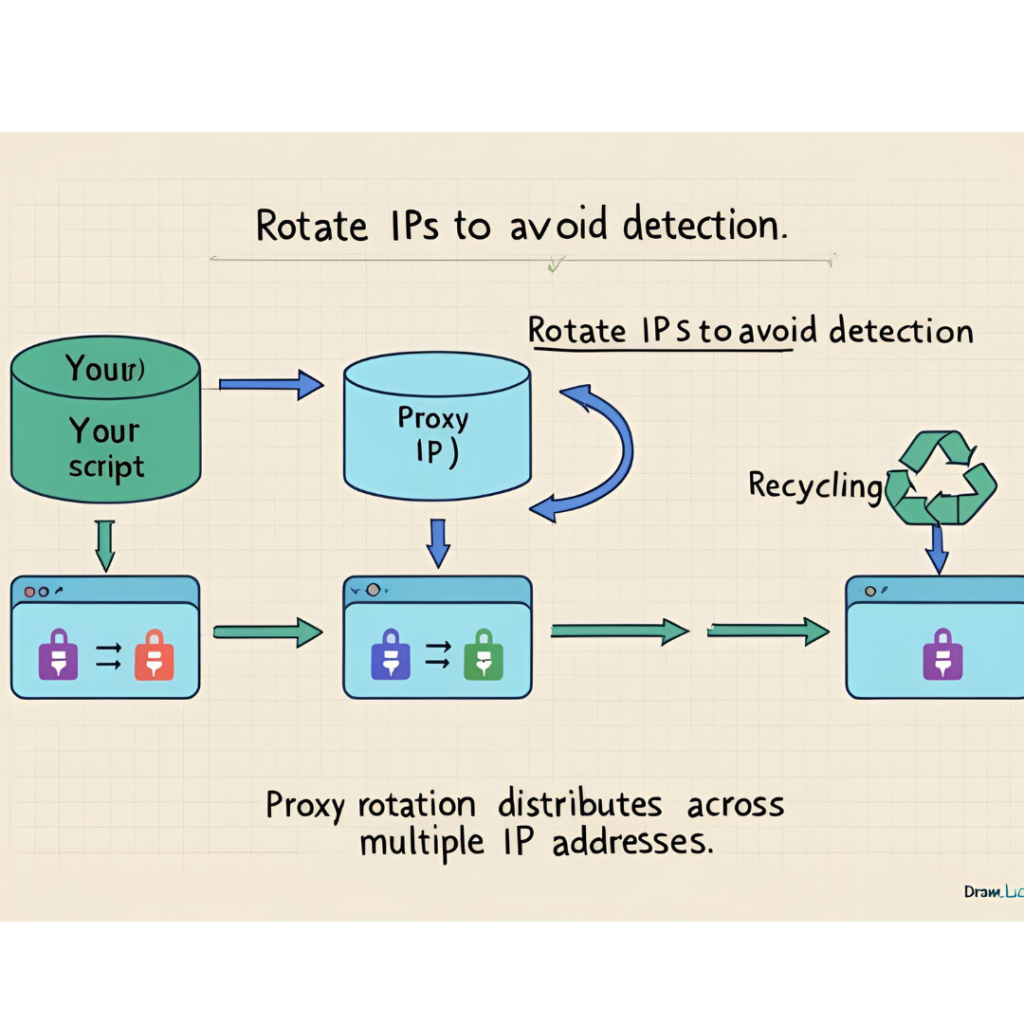

If you’ve ever tried scraping a website at scale, you’ve probably hit a roadblock: IP bans. Websites block suspicious traffic, and if you send too many requests from the same IP, you’ll get blacklisted.

That’s where proxy servers come in. They mask your real IP, distribute requests across multiple addresses, and help you scrape without getting blocked.

Looking for a reliable free proxy list? Paid options offer more stability, but free proxies can work for small projects, as long as you know where to find the best ones.
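Spreading requests across multiple addresses usually means rotating through a pool of proxies. Here is a minimal round-robin sketch. It assumes you already have a list of working proxy URLs. The addresses are placeholders from a documentation-only IP range, not real proxies, and `proxy_rotation` is a hypothetical helper name.

```python
import itertools
from typing import Dict, Iterator, List

# Placeholder addresses from the TEST-NET-3 documentation range (203.0.113.0/24).
# Swap in proxies taken from one of the lists below.
PROXY_POOL: List[str] = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:3128",
    "http://203.0.113.12:8000",
]


def proxy_rotation(pool: List[str]) -> Iterator[Dict[str, str]]:
    """Yield requests-style proxy dicts, cycling through the pool forever.

    Raises ValueError on the first next() call if the pool is empty.
    """
    if not pool:
        raise ValueError("proxy pool is empty")
    for proxy in itertools.cycle(pool):
        # One HTTP proxy can carry both plain HTTP and HTTPS (tunneled via CONNECT).
        yield {"http": proxy, "https": proxy}
```

To use it, create `rotation = proxy_rotation(PROXY_POOL)` once. Then pass `proxies=next(rotation)` (plus a `timeout`) to each `requests.get` call. Round-robin spreads the load evenly. Picking with `random.choice` is a simple alternative if a predictable order is a concern.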

⚠️ Important Warning About Free Proxies

Before we dive in, be aware:

🚨 Free proxies are risky. Many are slow, unreliable, or even malicious.

🚨 Use them cautiously—avoid logging into sensitive accounts.

🚨 For serious projects, consider paid proxies (like Bright Data or Smartproxy).

Now, let’s explore the best free proxy sources.

The 5 Best Free Proxy Lists for Web Scraping

1. FreeProxy.World

Why It’s Great:

✅ Updated daily with fresh proxies

✅ Filters by country, speed, and anonymity level

✅ Supports HTTP, HTTPS, and SOCKS5

Best For: Quick, temporary scraping tasks.

How to Use:

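```python
import requests

# Example address only: free proxies go offline quickly, so substitute a fresh one.
proxies = {
    'http': 'http://45.61.139.48:8000',
    'https': 'http://45.61.139.48:8000',  # HTTPS is tunneled through the same HTTP proxy
}

# A timeout stops the script from hanging on an unresponsive proxy.
response = requests.get("https://example.com", proxies=proxies, timeout=10)

print(response.text)
```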
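FreeProxy.World also lists SOCKS5 proxies. `requests` can route through them once its optional SOCKS dependency is installed (`pip install "requests[socks]"`). The sketch below uses a hypothetical helper to build the `proxies` dict. The `socks5h://` scheme asks the proxy to resolve hostnames, while `socks5://` resolves them locally.

```python
from typing import Dict


def socks5_proxies(host: str, port: int, remote_dns: bool = True) -> Dict[str, str]:
    """Build a requests proxies dict for a SOCKS5 proxy.

    remote_dns=True uses socks5h:// so DNS lookups also go through the proxy;
    remote_dns=False uses socks5:// and resolves hostnames on your machine.
    """
    scheme = "socks5h" if remote_dns else "socks5"
    url = f"{scheme}://{host}:{port}"
    return {"http": url, "https": url}
```

For example, `requests.get("https://example.com", proxies=socks5_proxies("203.0.113.20", 1080), timeout=10)` routes the request through the proxy. The host and port here are placeholders.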

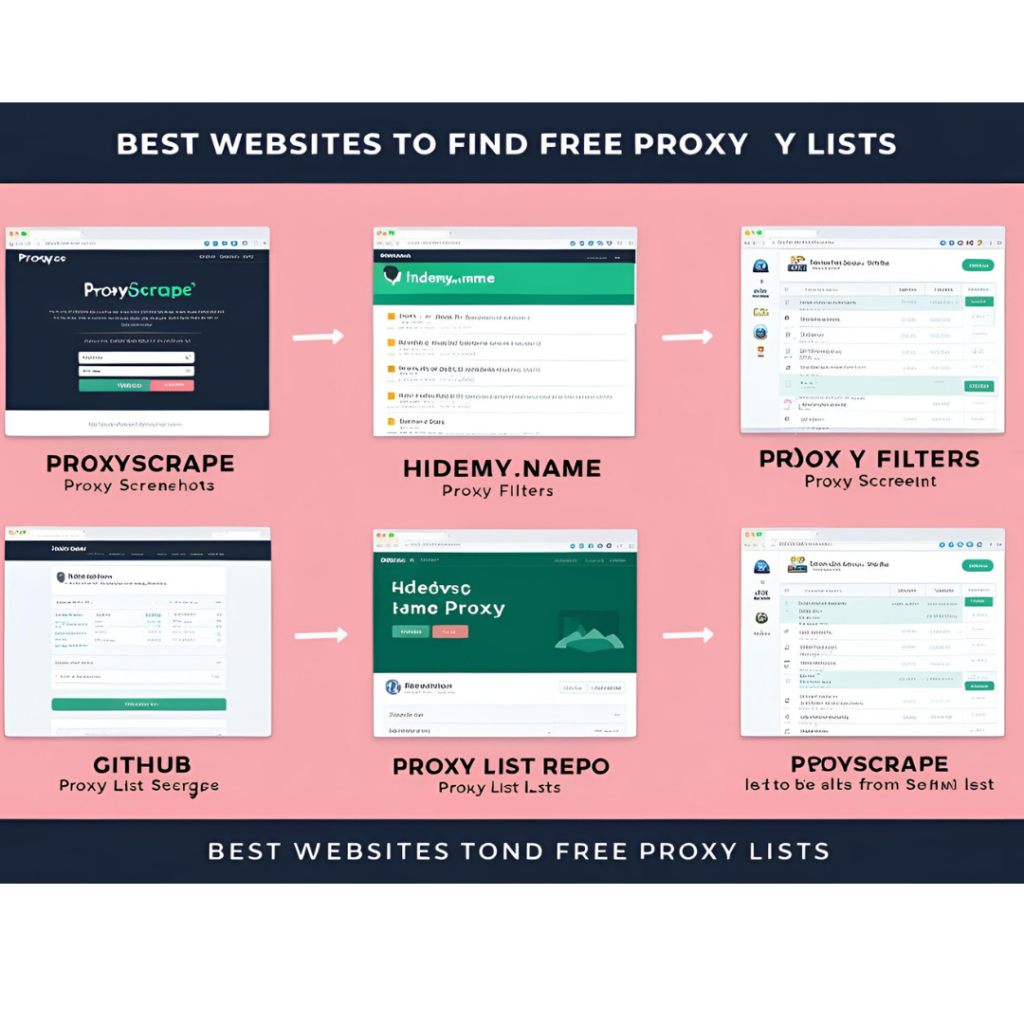

2. ProxyScrape

🔗 https://www.proxyscrape.com/free-proxy-list

Why It’s Great:

✅ Huge database (thousands of proxies)

✅ API access for automated scraping

✅ Filters by anonymity, protocol, and uptime

Best For: Developers who need an API to fetch fresh proxies.

Example API Call:

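```python
import requests

proxy_api = "https://api.proxyscrape.com/v2/?request=getproxies&protocol=http"

# splitlines() copes with both \r\n and \n line endings; blank lines are dropped.
response = requests.get(proxy_api, timeout=10)
proxy_list = [line.strip() for line in response.text.splitlines() if line.strip()]

print(proxy_list[:5])  # First 5 proxies
```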
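Raw API output can contain blank lines, duplicates, or the occasional malformed entry. A more defensive parsing step might look like the sketch below. It assumes the list holds IPv4 `ip:port` entries, one per line, and `parse_proxy_list` is a hypothetical helper name.

```python
import ipaddress
from typing import List


def parse_proxy_list(text: str) -> List[str]:
    """Turn a plain-text 'ip:port' list into clean, de-duplicated entries.

    Handles LF and CRLF line endings, skips blank or malformed lines,
    and keeps the original order.
    """
    seen = set()
    proxies = []
    for line in text.splitlines():
        entry = line.strip()
        host, sep, port = entry.rpartition(":")
        # Require a colon and a numeric port in the valid TCP range.
        if not sep or not port.isdecimal() or not 0 < int(port) < 65536:
            continue
        try:
            ipaddress.IPv4Address(host)  # reject anything that isn't a valid IPv4 address
        except ValueError:
            continue
        if entry not in seen:
            seen.add(entry)
            proxies.append(entry)
    return proxies
```

In practice you would call `proxy_list = parse_proxy_list(requests.get(proxy_api, timeout=10).text)`.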

3. HideMy.name Free Proxy List

🔗 https://hidemy.name/en/proxy-list/

Why It’s Great:

✅ High anonymity proxies

✅ Detailed speed and uptime stats

✅ Export as CSV or text

Best For: Manual testing and small-scale scraping.

Tip: Sort by “Response time” to find the fastest proxies.
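If you export the list as CSV, you can do the response-time sort in code. This is only a sketch. The column names `ip`, `port` and `response_ms` are hypothetical, so check the header row of your actual export and rename them to match.

```python
import csv
import io
from typing import List


def fastest_proxies(csv_text: str, limit: int = 10) -> List[str]:
    """Return 'ip:port' strings sorted by response time, fastest first.

    ASSUMPTION: the CSV has columns named 'ip', 'port' and 'response_ms'.
    Rename these to match the header row of your real export.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            latency = float(row["response_ms"])
        except (KeyError, TypeError, ValueError):
            continue  # skip rows with a missing or non-numeric latency
        rows.append((latency, f"{row['ip']}:{row['port']}"))
    rows.sort(key=lambda item: item[0])
    return [proxy for _, proxy in rows[:limit]]
```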

4. Spys.one

🔗 https://spys.one/free-proxy-list/

Why It’s Great:

✅ Real-time updates

✅ Advanced filtering (e.g., elite vs. transparent proxies)

✅ SOCKS5 support

Best For: Users who need fine-grained proxy filtering.

Warning: Some proxies may be unstable—test before use.
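You can also check a proxy's anonymity level yourself rather than trusting the listed label. Request a header-echo endpoint such as `http://httpbin.org/headers` through the proxy and inspect which headers arrived. The sketch below is a heuristic. The header names are common proxy markers, not an exhaustive list, and `classify_anonymity` is a hypothetical helper.

```python
import re
from typing import Dict

# Headers that commonly reveal a proxy in the path (heuristic, not exhaustive).
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}
IPV4_PATTERN = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def classify_anonymity(real_ip: str, seen_headers: Dict[str, str]) -> str:
    """Label a proxy 'transparent', 'anonymous' or 'elite' from the headers
    the target server received through it."""
    lowered = {name.lower(): value for name, value in seen_headers.items()}
    # Transparent: your real IPv4 address shows up in any header value.
    for value in lowered.values():
        if real_ip in IPV4_PATTERN.findall(value):
            return "transparent"
    # Anonymous: your IP is hidden, but the proxy announces itself.
    if any(name in lowered for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"
```

To use it, fetch `http://httpbin.org/ip` without a proxy and read its `origin` field to get your real IP. Then pass that IP and the `headers` object returned by `http://httpbin.org/headers` (requested through the proxy) to `classify_anonymity`.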

5. GitHub Proxy Lists

🔗 https://github.com/clarketm/proxy-list

Why It’s Great:

✅ Community-maintained (updated regularly)

✅ Simple text format for easy parsing

✅ Includes elite (high anonymity) proxies

Best For: Developers who want an auto-updating list.

Example Usage:

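```python
import requests

proxy_url = "https://raw.githubusercontent.com/clarketm/proxy-list/master/proxy-list.txt"

# splitlines() avoids counting the empty string left by a trailing newline.
# If the file includes header or annotation lines, filter those out too.
response = requests.get(proxy_url, timeout=10)
proxies = [line.strip() for line in response.text.splitlines() if line.strip()]

print("Available proxies:", len(proxies))
```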
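Because the list changes whenever the maintainers push an update, a long-running scraper can keep a copy in memory and re-download it periodically. Below is a sketch of a small time-based cache; `ProxyListCache` is a hypothetical class. The one-hour default is an arbitrary choice, not a figure from the repository.

```python
import time
from typing import Callable, List, Optional


class ProxyListCache:
    """Keep a downloaded proxy list in memory and re-fetch it after `ttl` seconds."""

    def __init__(self, fetch: Callable[[], List[str]], ttl: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch  # any zero-argument function that returns a list of proxies
        self._ttl = ttl
        self._clock = clock  # injectable so the refresh logic can be tested
        self._proxies: List[str] = []
        self._fetched_at: Optional[float] = None

    def get(self) -> List[str]:
        """Return the cached list, downloading a fresh copy if it has expired."""
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at >= self._ttl:
            self._proxies = self._fetch()
            self._fetched_at = now
        return self._proxies
```

For the GitHub list, the fetch function could be `lambda: [p.strip() for p in requests.get(proxy_url, timeout=10).text.splitlines() if p.strip()]`.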

How to Test Free Proxies Before Use

Not all proxies work. Always test them first:

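```python
import requests

def test_proxy(proxy):
    """Return True if the proxy can fetch httpbin.org/ip within 5 seconds."""
    try:
        response = requests.get(
            "http://httpbin.org/ip",
            proxies={"http": proxy, "https": proxy},
            timeout=5,
        )
        response.raise_for_status()  # treat 4xx/5xx replies as failures too
        print("✅ Working proxy:", proxy)
        return True
    except requests.RequestException:
        # Connection errors, timeouts, and bad status codes all end up here.
        print("❌ Dead proxy:", proxy)
        return False

test_proxy("http://45.61.139.48:8000")  # Replace with your proxy
```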
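Checking a long list one proxy at a time, with a 5-second timeout each, gets slow quickly. The sketch below fans the checks out across a thread pool; `filter_working` is a hypothetical helper name, and the default of 20 workers is an arbitrary choice. It takes the checker as a parameter, so `test_proxy` can be passed in as-is.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def filter_working(proxies: Iterable[str], check: Callable[[str], bool],
                   max_workers: int = 20) -> List[str]:
    """Run `check` on many proxies in parallel and keep the ones that pass.

    Threads suit this job because each check spends most of its time waiting
    on the network. The result keeps the input order.
    """
    proxies = list(proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(check, proxies))  # map() preserves input order
    return [proxy for proxy, ok in zip(proxies, results) if ok]
```

For example, `working = filter_working(proxy_list, test_proxy)`. Keep the worker count modest so the check itself doesn't look like a flood of traffic.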

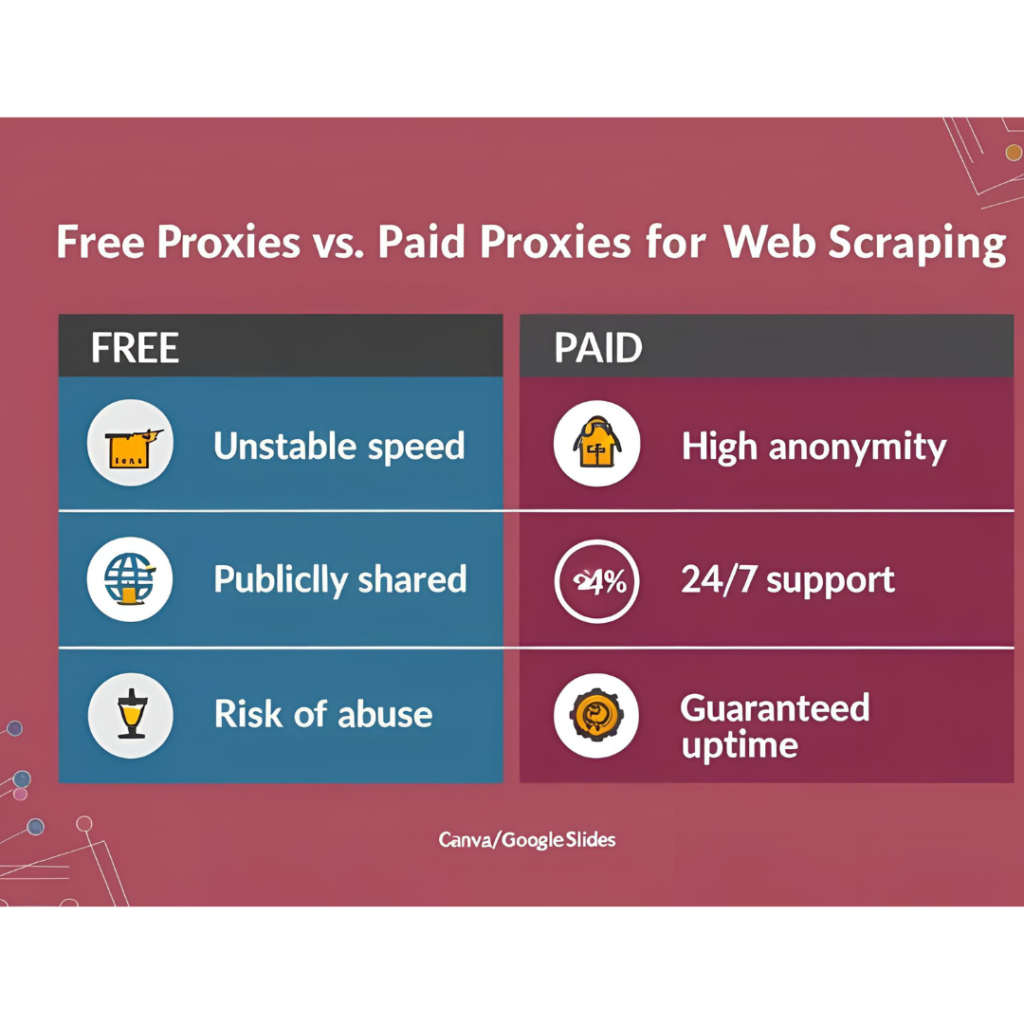

When Should You Upgrade to Paid Proxies?

Free proxies are great for testing, but if you need:

✔ Higher reliability (fewer dead proxies)

✔ Better speed (low-latency connections)

✔ Geo-targeting (US-only, UK-only, etc.)

Consider paid options like:

Bright Data (Best for enterprises)

Smartproxy (Affordable & reliable)

Oxylabs (High-performance scraping)

Final Thoughts

Free proxy lists are useful for small projects, testing, or learning, but they come with risks. If you’re doing serious web scraping, invest in paid proxies for better performance and security.


Have you used free proxies before? Share your experiences below! 👇
